I am a Lecturer (Assistant Professor) in Machine Learning and Computer Vision at the School of Engineering, University of Edinburgh. I co-lead the Bayesian and Neural Systems research group.

My research interests include:

- simplifying machine learning

- AutoML, especially neural architecture search

- efficient network training

- low-resource deep learning

- engineering applications of machine learning

I have an MEng in Engineering Science and a DPhil (PhD), both from the University of Oxford. My DPhil was on "Visual recognition in Art using Machine Learning" with Andrew Zisserman in the VGG group. After my DPhil, I was a postdoc at the School of Informatics in Edinburgh with Amos Storkey.

I hold an EPSRC New Investigator Award and I am an investigator on the dAIEdge Horizon Network.

Team

I am principal advisor for:

- Dr Linus Ericsson (Postdoc) who is exploring the fundamentals of neural architectures

- Chenhongyi Yang (PhD student) who works on 2D and 3D visual recognition

- Miguel Espinosa (PhD student) who works on engineering big models for earth observation

- Shiwen Qin (PhD student) who works on efficient training of LLMs

News

- July 2024. Our PlainMamba paper was accepted to BMVC 2024.

- July 2024. Amos Storkey and I are recruiting a postdoc (2 years) in Continual Machine Learning at the Edge. Please contact Amos with any informal enquiries at amos+daiedge *at* inf.ed.ac.uk.

- July 2024. Our pose estimation paper was accepted to ECCV 2024, and our transformer-based Bird's-Eye-View 3D detection paper was accepted to IROS 2024.

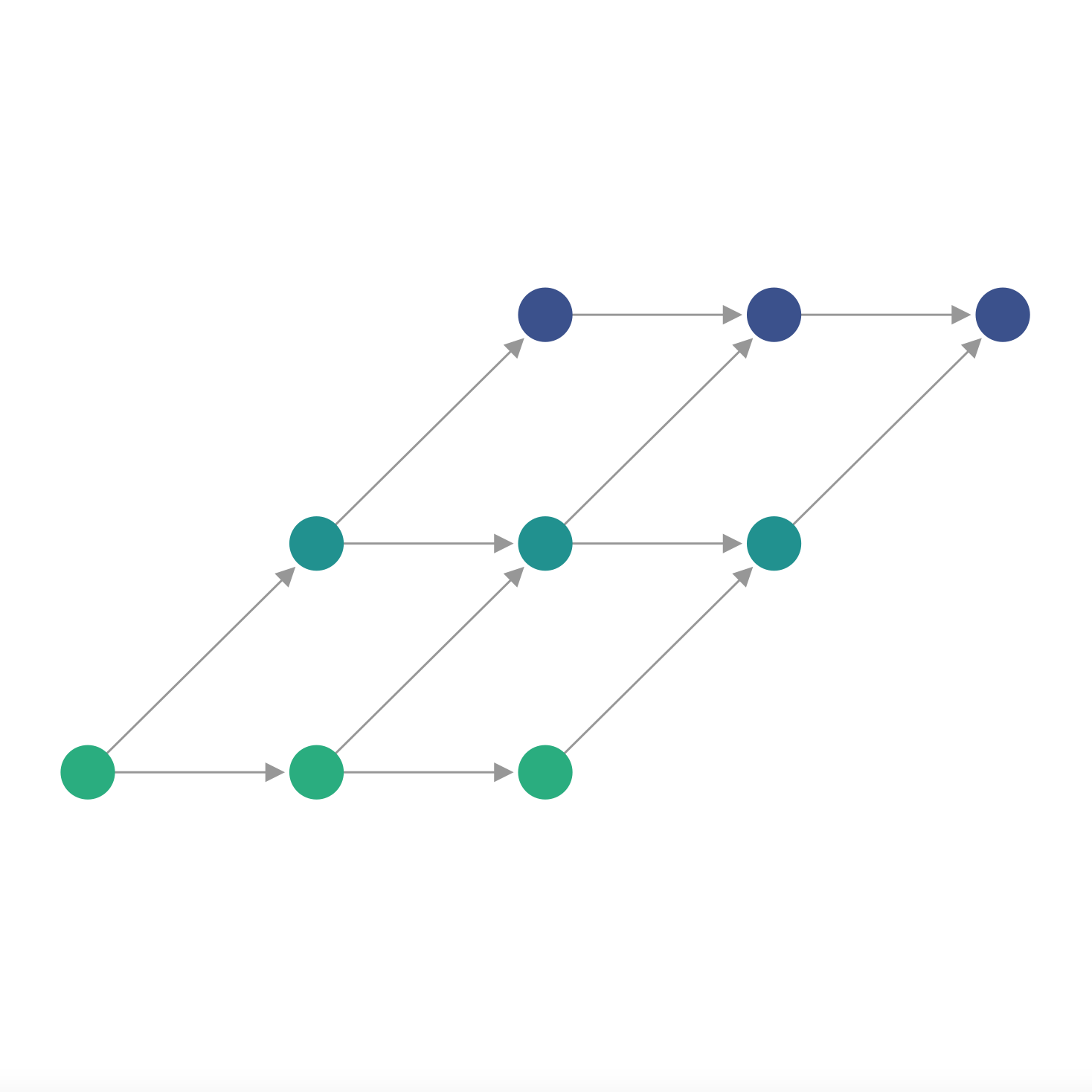

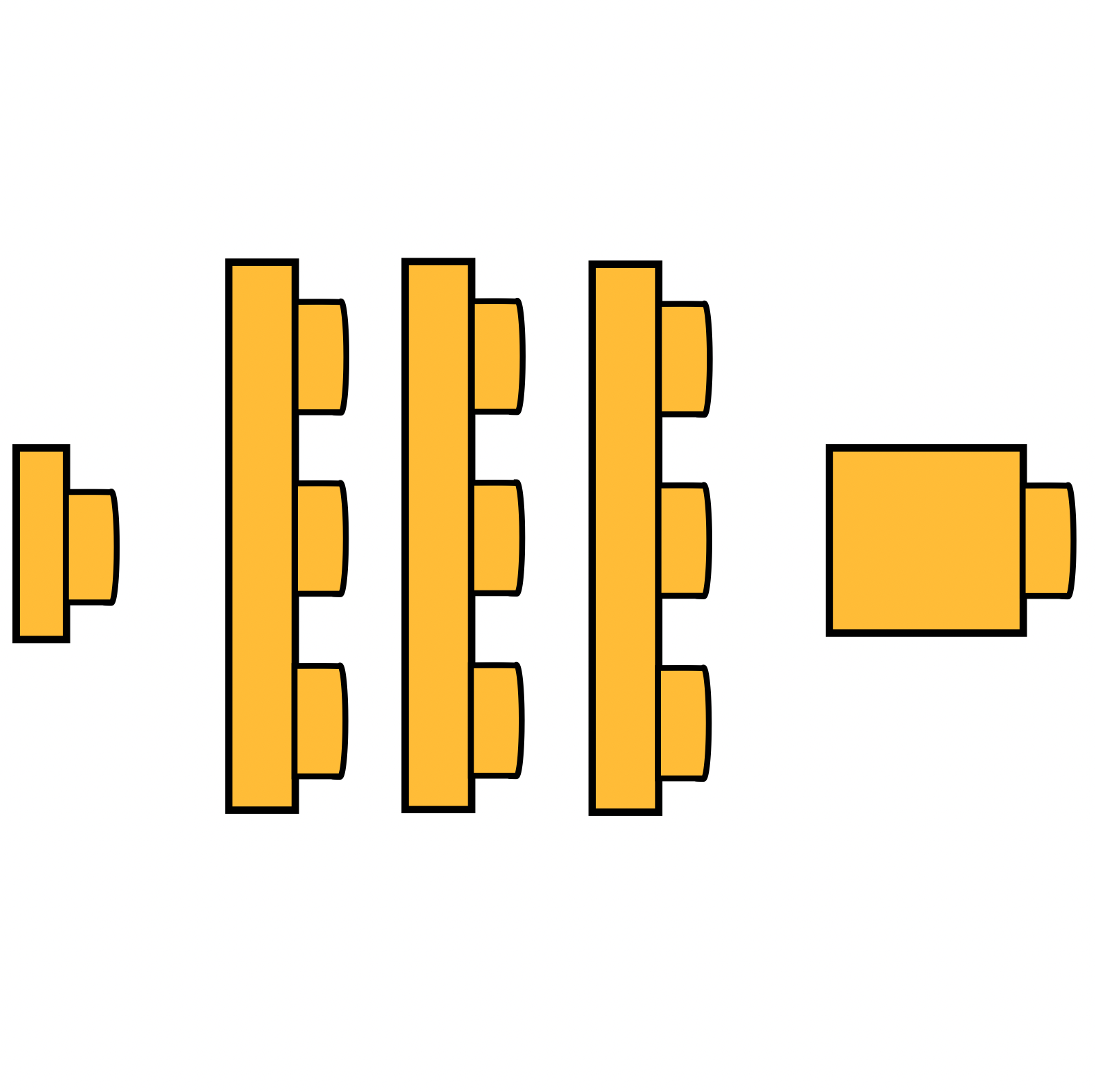

- June 2024. We have created einspace: an expressive search space for NAS. For a high-level intro and an interactive architecture visualiser, see the project page.

- April 2024. More preprints! A strong, simple baseline for egocentric 3D pose estimation and an investigation into HPO for continual learning.

- March 2024. We have joined the Mamba bandwagon (Mambwagon?) in our latest preprint!

- February 2024. Chenhongyi's paper on active learning for object detection was accepted to CVPR 2024.

- February 2024. I will be a co-investigator on the EPSRC AI Hub for Causality in Healthcare AI with Real Data (CHAI).

- January 2024. I have been nominated for an EUSA Teaching Award.

- January 2024. I am co-organising this year's NAS Unseen-Data competition as part of AutoML 2024.

- January 2024. I am co-organising the Fifth Workshop on Neural Architecture Search at CVPR 2024.

Selected Publications

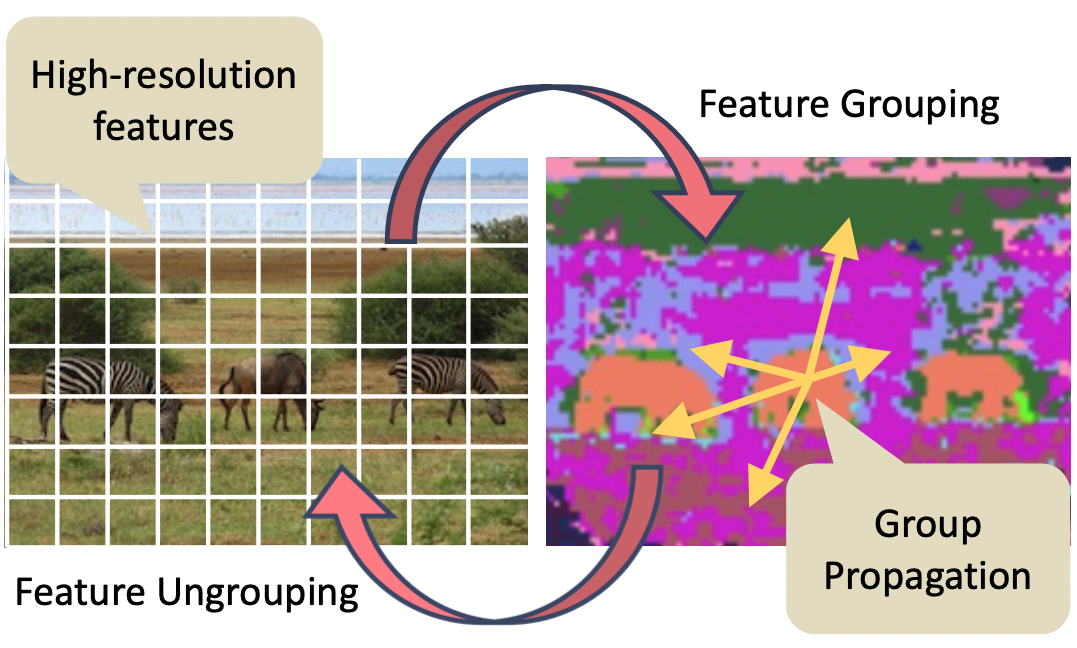

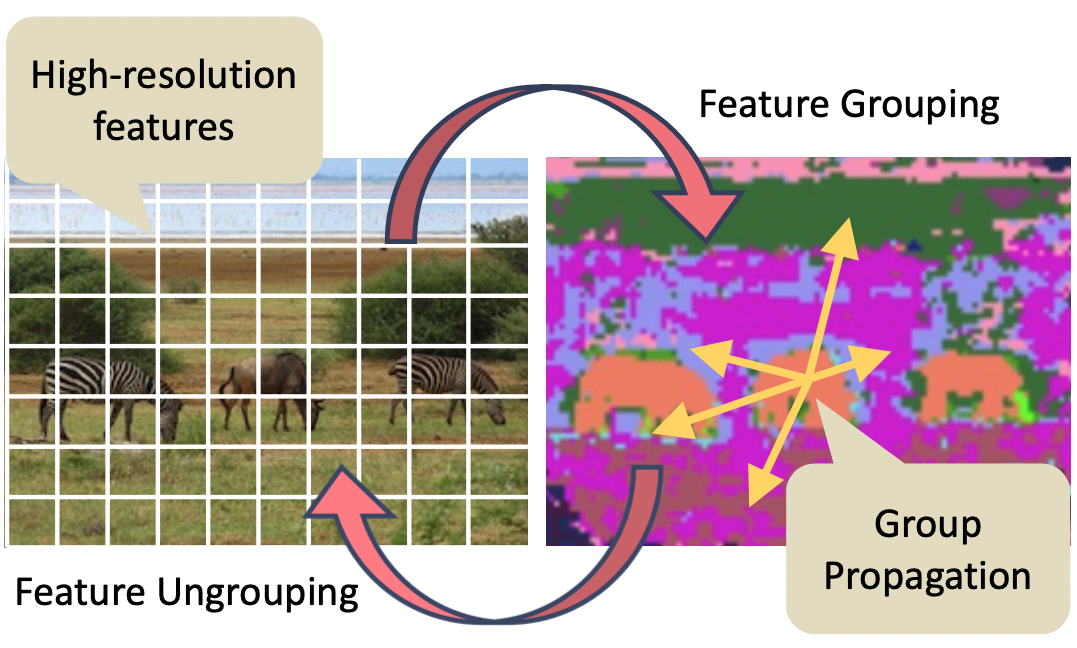

GPViT: A High Resolution Non-Hierarchical Vision Transformer with Group Propagation

ICLR 2023 (Accepted as a notable paper)

Chenhongyi Yang*, Jiarui Xu*, Shalini De Mello, Elliot J. Crowley, Xiaolong Wang

A new vision transformer architecture that serves as an excellent backbone across different fine-grained vision tasks.

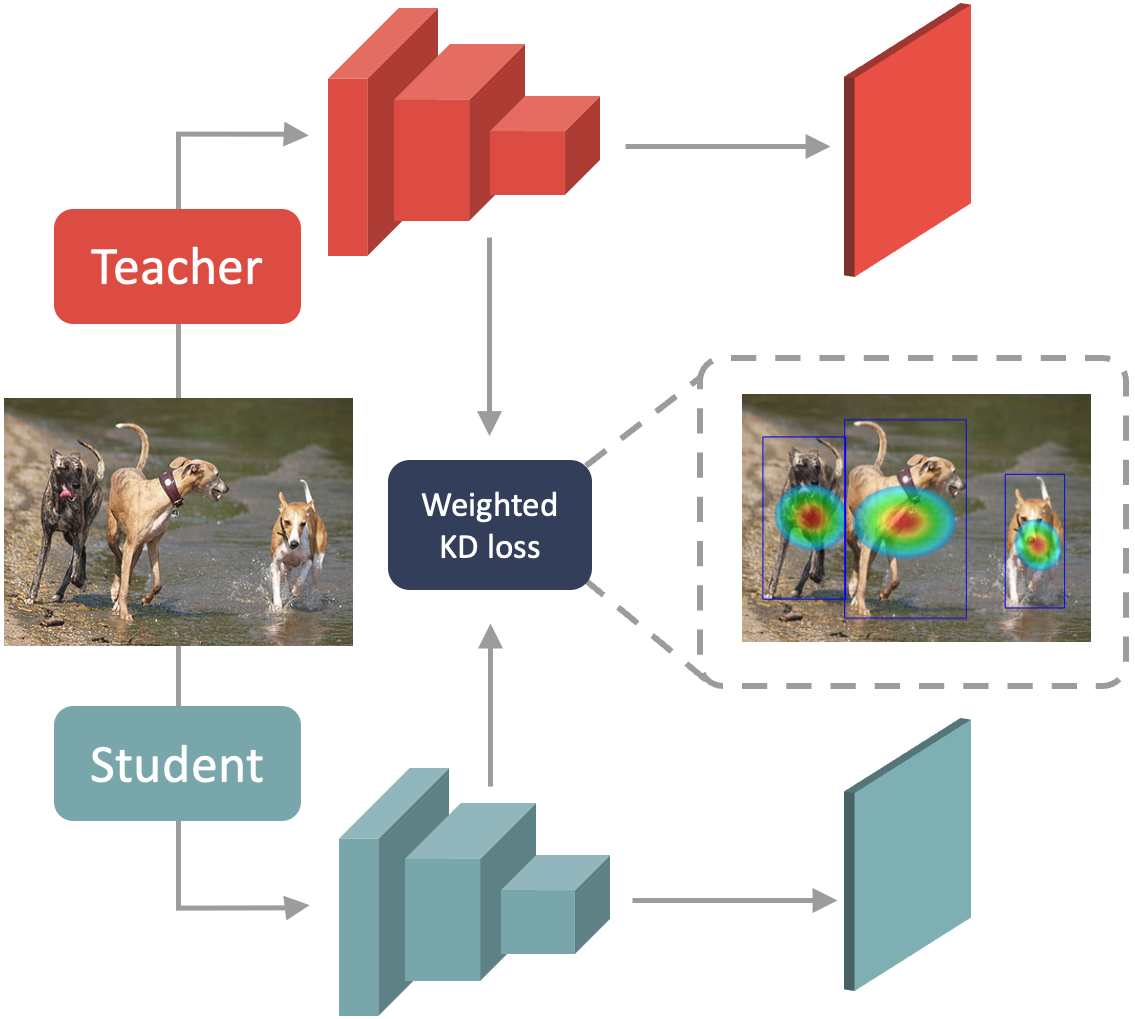

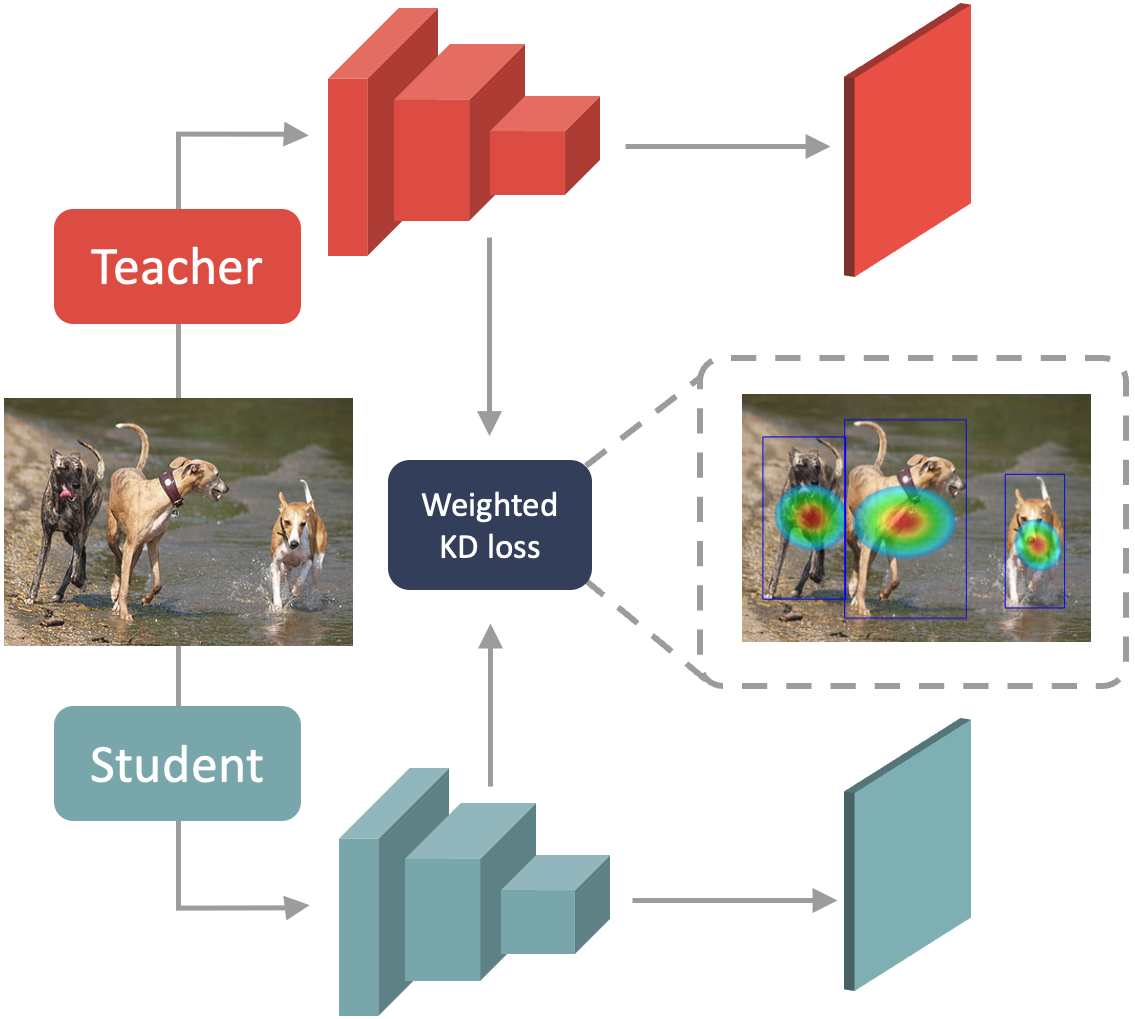

Prediction-Guided Distillation for Dense Object Detection

Chenhongyi Yang, Mateusz Ochal, Amos Storkey, Elliot J. Crowley

A knowledge distillation framework for single stage detectors that uses a few key predictive regions to obtain high performance.

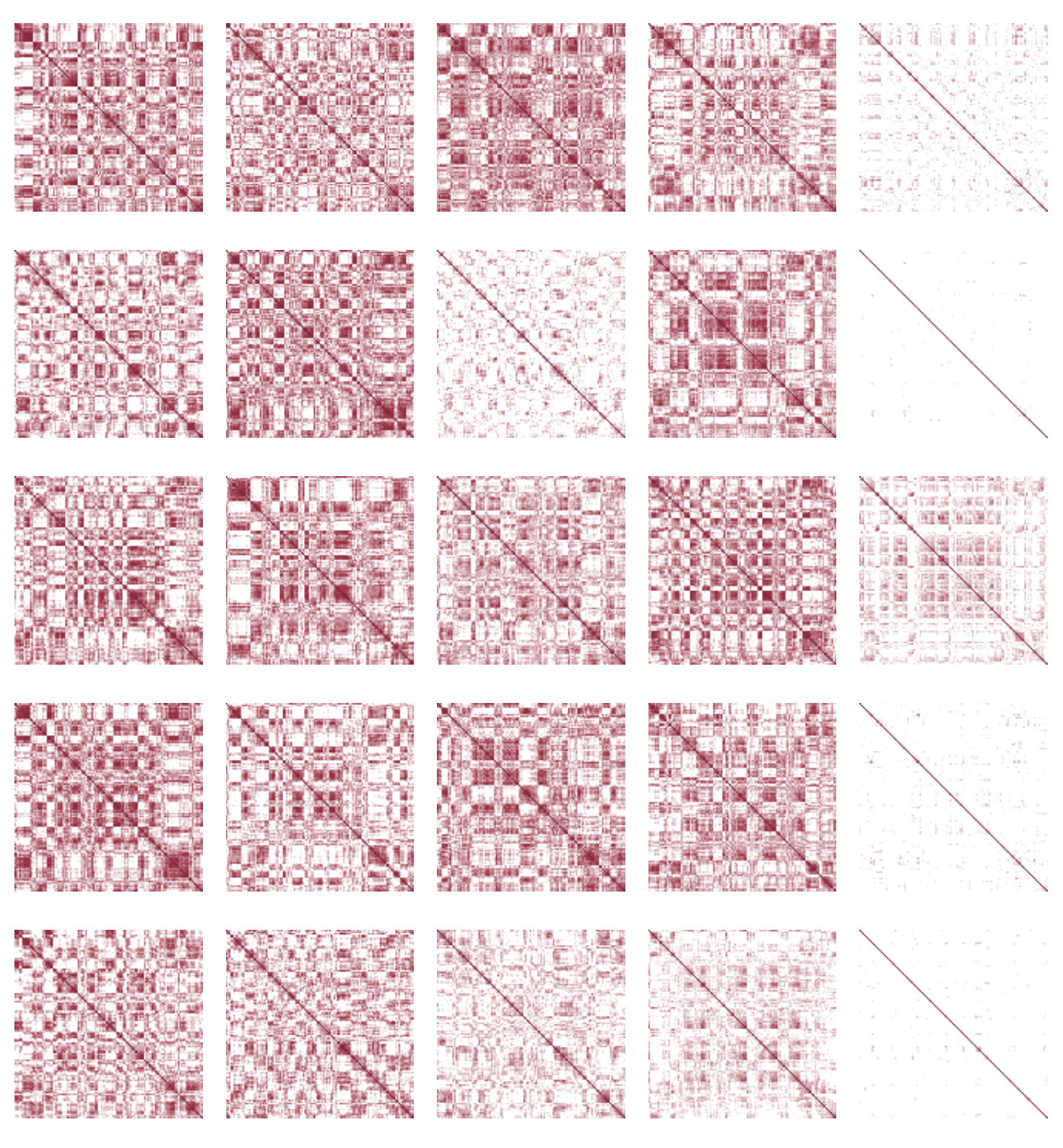

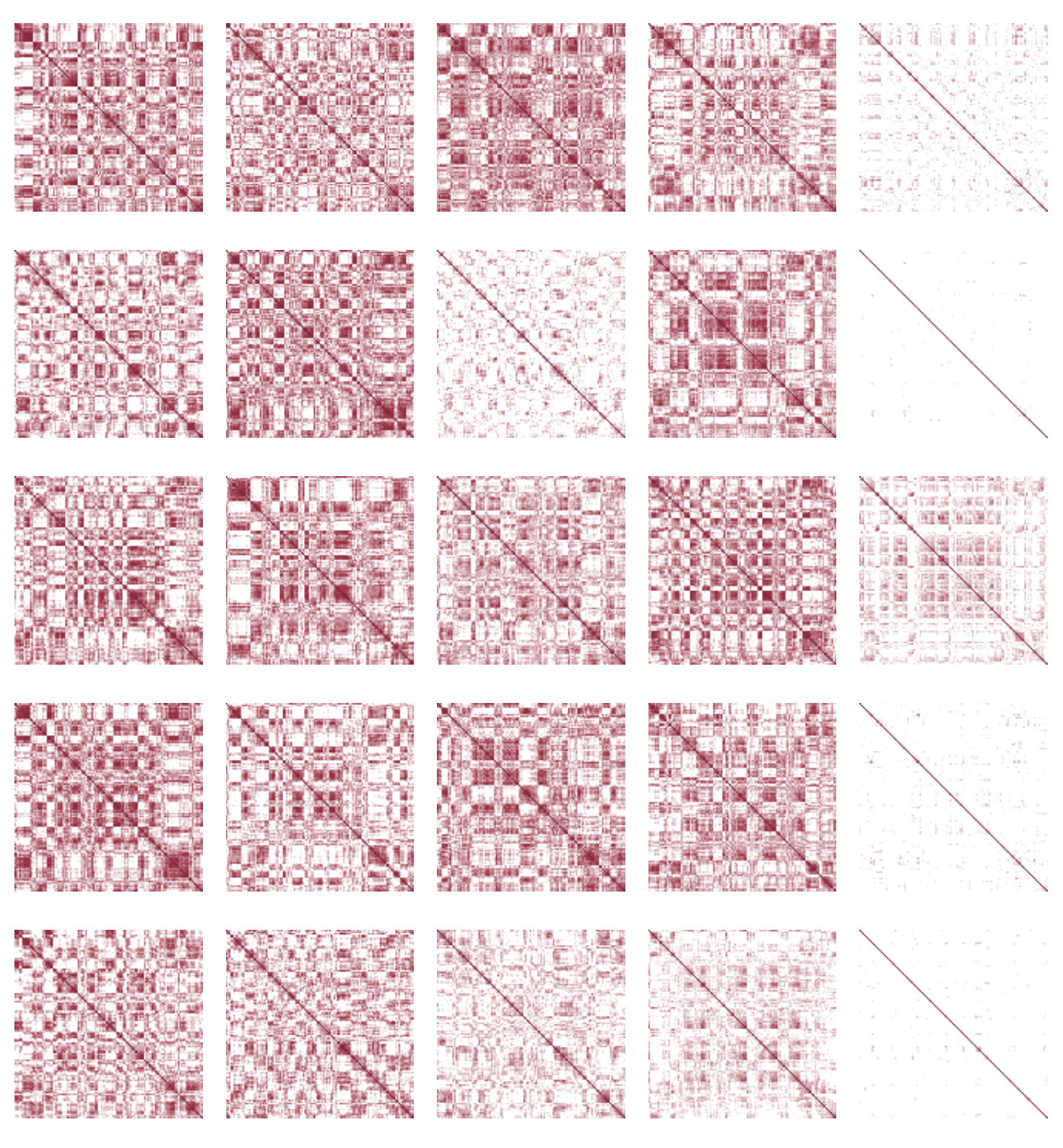

Neural Architecture Search without Training

Joseph Mellor, Jack Turner, Amos Storkey, Elliot J. Crowley

A low-cost measure for scoring networks at initialisation that can be used to perform neural architecture search in seconds.

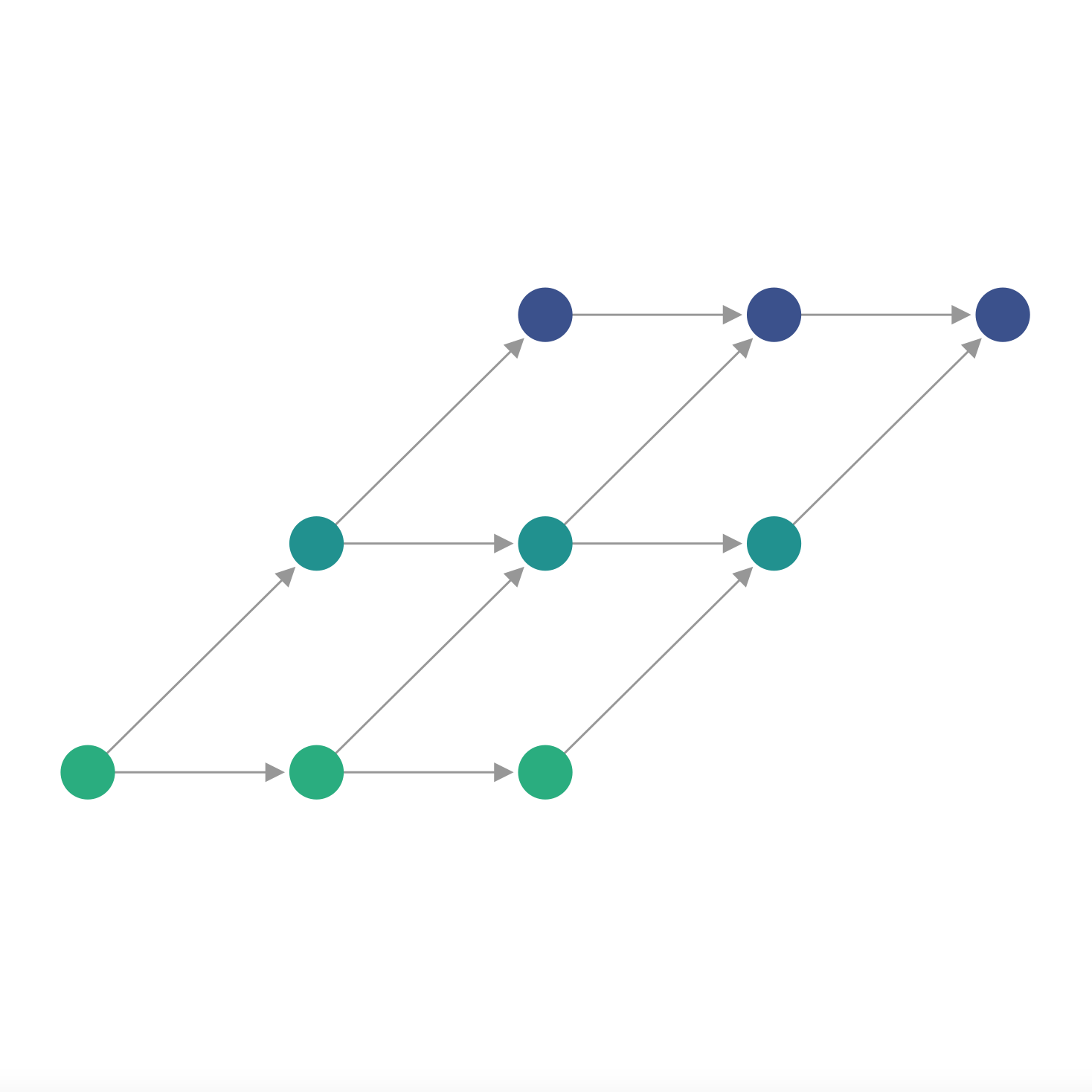

Neural Architecture Search as Program Transformation Exploration

ASPLOS 2021 (Distinguished Paper)

Jack Turner, Elliot J. Crowley, Michael O'Boyle

A compiler-oriented approach to neural architecture search which can generate new convolution operations.

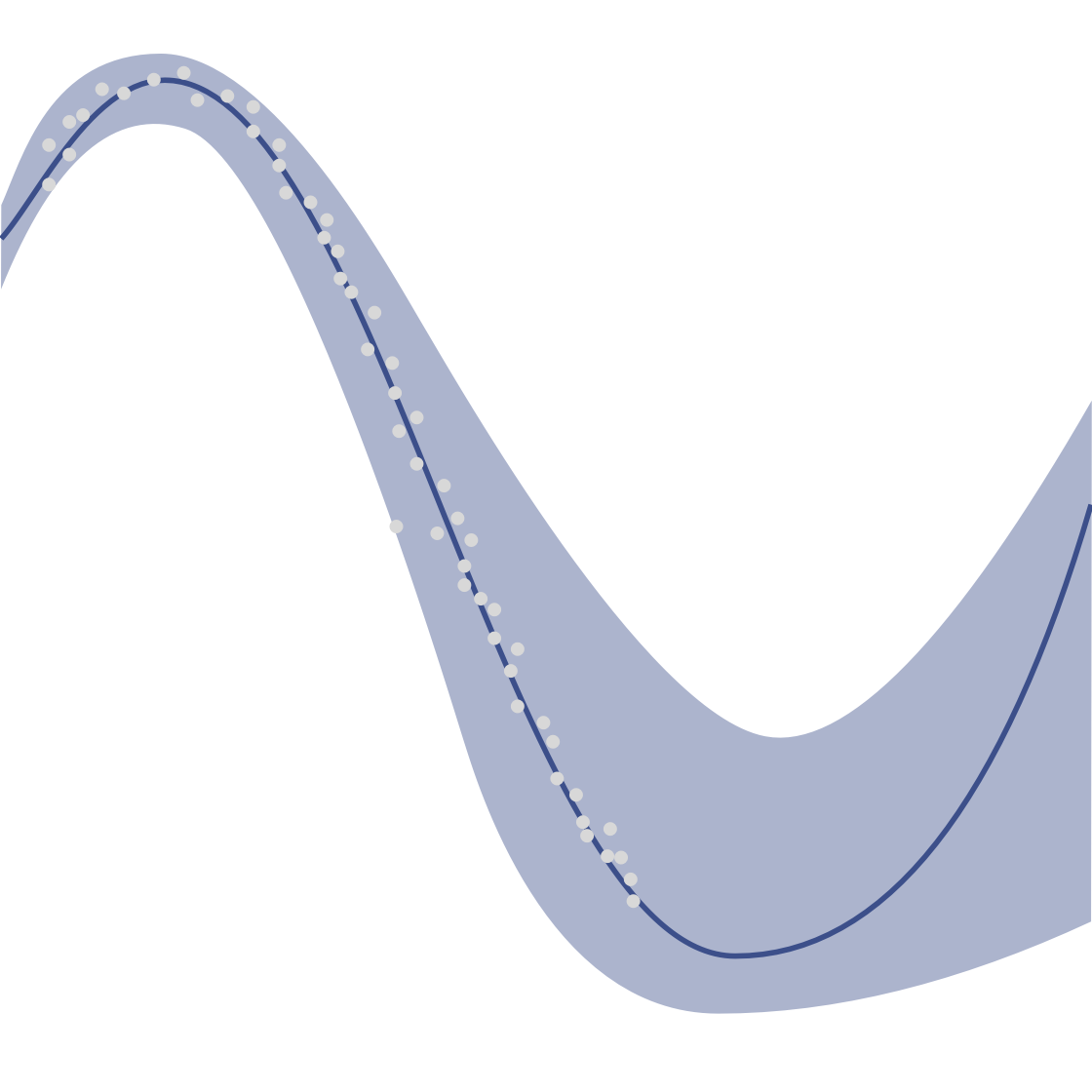

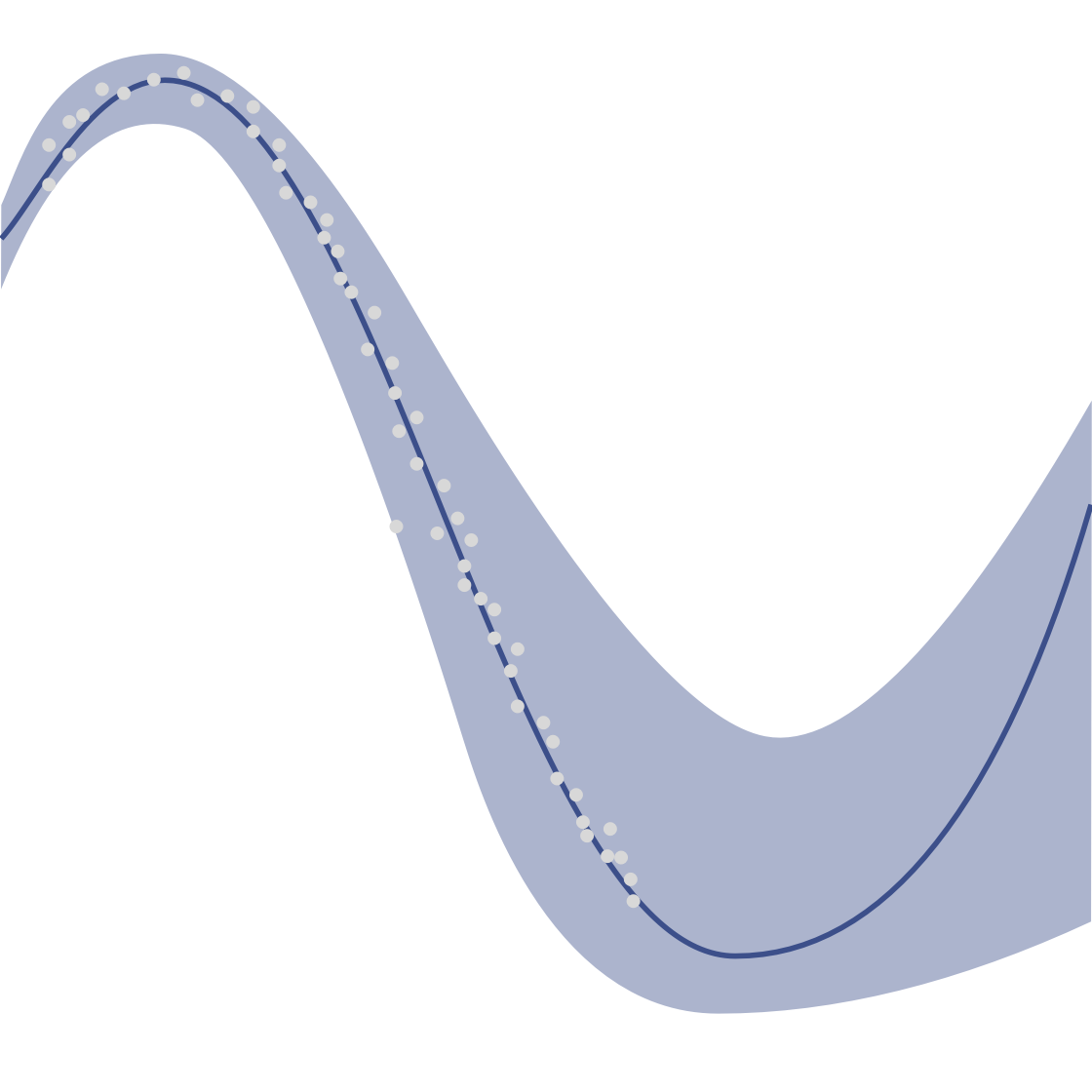

Bayesian Meta-Learning for the Few-Shot Setting via Deep Kernels

Massimiliano Patacchiola, Jack Turner, Elliot J. Crowley, Michael O'Boyle, Amos Storkey

A simple Bayesian alternative to standard meta-learning.

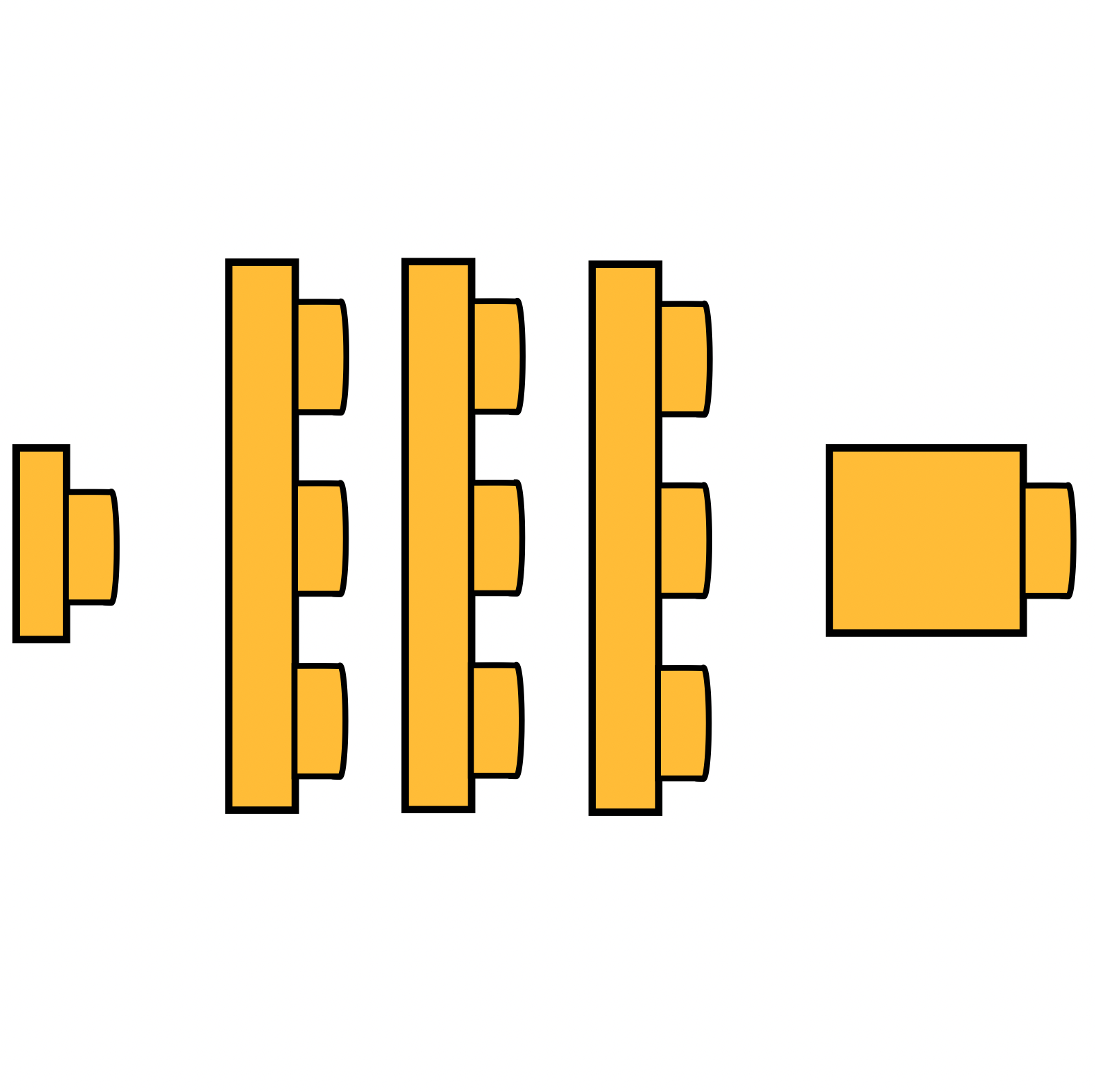

BlockSwap: Fisher-guided Block Substitution for Network Compression on a Budget

Jack Turner*, Elliot J. Crowley*, Michael O'Boyle, Amos Storkey, Gavia Gray

A fast algorithm for obtaining a compressed network architecture using Fisher information.

Thanks to Jack Turner and Chenhongyi Yang for the website template.